This post is Part 4 in the series of posts on Garbage Collection (GC). Please see the index here.

Recap of definitions

I’ll quickly repeat a couple of definitions that are used in this post.

- Object: This is a unit of storage on the heap. It generally means an object in the object-oriented sense, but the term applies equally to a procedural language (e.g. a struct or native type in C) or a functional language.

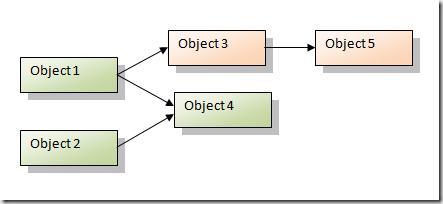

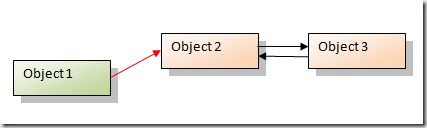

- Object/Reference graph: This is the directional graph of objects in memory. A typical sample is below. The nodes are objects in memory and the edges (arrows) are references one object holds to another (e.g. a pointer or reference in C/C++). There can be circular references between two nodes (Object 3 and Object 5) or nodes referencing themselves (Object 6).

- Roots: These are the set of nodes in the object graph from which the references start. They are typically references held in registers, local variables on the stack, or global variables. The green nodes in the diagram above are roots.

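- Unreachable object: These are nodes in the graph that cannot be reached by following edges from any root. The orange node in the diagram above is an unreachable object. This is the node the GC needs to clean up/free, because no chain of references from the roots leads to it and it is hence garbage. Note that an unreachable node can still have incoming edges, e.g. from another unreachable node in a cycle.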

Mark-sweep GC

In the last post I discussed reference counting GC, which continually tracks references and frees an object the moment it is no longer referenced. Mark-sweep GC takes a very different approach.

In this scheme, objects are not freed the moment they become garbage. In fact, no subsystem keeps track of objects while they are being used.

When the system starts running out of memory (or on some other trigger), the GC is fired. It first enumerates all the roots and then recursively visits the objects referenced by them (essentially traversing the nodes of the object graph). When it reaches an object, it marks it with a special flag indicating that the object is reachable and hence not garbage. At the end of this mark phase it enters the sweep phase: any object in memory that is still not marked is garbage, and the system disposes of it.

The algorithm

At the top level, the algorithm in C-like pseudocode looks as follows:

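```c
void GC()
{
    HaltAllProcessing();                  // stop the program while collecting

    ObjectCollection roots = GetRoots();  // phase 1: root enumeration

    for (int i = 0; i < roots.Count(); ++i)
        Mark(roots[i]);                   // phase 2: mark everything reachable

    Sweep();                              // phase 3: free everything unmarked
}
```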

It has three phases: enumerating the roots, marking all objects reachable from the roots, and finally sweeping the heap.
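To make these three phases concrete, here is a minimal, runnable toy in C. It is a sketch under simplifying assumptions, not how any real runtime is built: the "heap" is a fixed array of hypothetical `Object` slots, each object holds at most `MAX_REFS` outgoing references, the roots are passed in explicitly, and "freeing" just clears an `in_use` flag. Since the toy is single-threaded, there is nothing to halt.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define HEAP_SLOTS 8
#define MAX_REFS 2

/* Hypothetical object: a tiny header (in_use + marked) plus references. */
typedef struct Object {
    bool in_use;                    /* slot currently holds an allocation */
    bool marked;                    /* mark bit, set during the mark phase */
    struct Object *refs[MAX_REFS];  /* outgoing edges; NULL means empty */
} Object;

static Object heap[HEAP_SLOTS];

/* Allocate the first free slot, or return NULL if the heap is full. */
static Object *alloc_object(void) {
    for (size_t i = 0; i < HEAP_SLOTS; ++i) {
        if (!heap[i].in_use) {
            heap[i] = (Object){ .in_use = true };  /* refs start as NULL */
            return &heap[i];
        }
    }
    return NULL;
}

/* Mark phase: recursion stops at NULL or at an already-marked object. */
static void mark(Object *obj) {
    if (obj == NULL || obj->marked)
        return;
    obj->marked = true;
    for (size_t i = 0; i < MAX_REFS; ++i)
        mark(obj->refs[i]);
}

/* Sweep phase: free unmarked objects and clear the mark bit on survivors.
   Returns the number of objects freed. */
static size_t sweep(void) {
    size_t freed = 0;
    for (size_t i = 0; i < HEAP_SLOTS; ++i) {
        if (!heap[i].in_use)
            continue;
        if (!heap[i].marked) {
            heap[i].in_use = false;   /* "free" the slot */
            ++freed;
        } else {
            heap[i].marked = false;   /* reset for the next collection */
        }
    }
    return freed;
}

/* Top level: mark from every root, then sweep. */
static size_t gc(Object **roots, size_t root_count) {
    assert(roots != NULL || root_count == 0);
    for (size_t i = 0; i < root_count; ++i)
        mark(roots[i]);
    return sweep();
}
```

For example, with a single root `a` that references `b`, where `b` also references itself and two other objects `c` and `d` reference only each other, `gc` keeps `a` and `b` and frees `c` and `d`. The `c`/`d` cycle is collected even though each of them has an incoming reference, because neither can be reached from a root.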

Root Enumeration

As I mentioned above, root enumeration lists all references held in registers, global or static fields, local variables on the stack, function arguments on the stack, etc. The runtime system needs to provide a way for the GC to collect the list of roots. For example, in .NET the JIT maintains information on the active roots and provides APIs for the GC to enumerate them. The system can also do some form of analysis to optimize this further. Say a function accepts a pointer argument. While JITing the method, the jitter can figure out where the argument is last used and drop it from the list of roots right after that point, instead of keeping it alive until the method returns.
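The .NET JIT derives root information from the compiled code itself. A much simpler technique, often called a *shadow stack*, is sketched below instead; it is a swapped-in illustration, not the .NET mechanism. Code explicitly registers the *address* of each reference-holding local with the runtime, so at collection time the GC reads each local's current value. The names `push_root`, `pop_roots` and `get_roots`, and the stand-in `Object` type, are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_ROOTS 64

/* Stand-in object type; only pointers to it matter here. */
typedef struct Object { int payload; } Object;

/* Each entry is the address of a local variable that holds a reference. */
static Object **root_stack[MAX_ROOTS];
static size_t root_count = 0;

/* Called on function entry for each reference-holding local. */
static void push_root(Object **slot) {
    assert(root_count < MAX_ROOTS);
    root_stack[root_count++] = slot;
}

/* Called on function exit to unregister that function's n locals. */
static void pop_roots(size_t n) {
    assert(n <= root_count);
    root_count -= n;
}

/* Root enumeration: copy the current non-NULL references into out[].
   Returns how many roots were written. */
static size_t get_roots(Object **out, size_t cap) {
    size_t n = 0;
    for (size_t i = 0; i < root_count && n < cap; ++i) {
        if (*root_stack[i] != NULL)
            out[n++] = *root_stack[i];
    }
    return n;
}
```

Because `get_roots` skips NULL entries, setting a local to NULL after its last use has roughly the same effect as the jitter dropping a dead argument from the root list: the object it pointed to is no longer kept alive by that local.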

Mark

Typically every object is given some sort of header, as I mentioned in my last post. This header contains a bit flag that is used to “mark” the object. The Mark procedure is recursive and works as follows:

```c
void Mark(Object* pObj)
{
    if (!Marked(pObj)) // Marked returns the marked flag from object header
    {
        MarkBit(pObj); // Marks the flag in obj header

        // Get list of references that the current object has
        // and recursively mark them as well
        ObjectCollection children = pObj->GetChildren();
        for (int i = 0; i < children.Count(); ++i)
        {
            Mark(children[i]); // recursively call mark
        }
    }
}
```

This is a recursive algorithm with a heavy dose of simplification, and we will revisit it in later posts along with its actual implementation implications.

The recursion terminates when either

- a node doesn’t have any children (leaf node), or

- the node is already marked, which ensures that we do not land in infinite recursion on circular references.

At the end of this marking phase, every object that can be reached through references from the roots has been visited and has its mark bit set.
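Because `Mark` recurses once for every object along a path of references, a long chain (say, a linked list with a million nodes) can overflow the native stack. A common way around this, shown here as a sketch and not necessarily what later posts describe, is to replace recursion with an explicit mark stack. The `Object` type with a fixed number of reference slots and the caller-supplied `stack` array are assumptions. The two termination criteria still hold: an object with no children pushes nothing, and an already-marked object is never pushed again.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_REFS 2

/* Hypothetical object: a mark bit plus a fixed number of references. */
typedef struct Object {
    bool marked;
    struct Object *refs[MAX_REFS];  /* NULL means no reference */
} Object;

/* Iterative mark. Objects are marked when pushed, so each object is pushed
   at most once and `stack` needs room for at most as many entries as there
   are objects. Returns the number of objects newly marked. */
static size_t mark_iterative(Object *root, Object **stack, size_t cap) {
    size_t top = 0, marked = 0;
    if (root == NULL || root->marked)
        return 0;
    assert(cap > 0);
    root->marked = true;
    stack[top++] = root;
    ++marked;
    while (top > 0) {
        Object *obj = stack[--top];
        for (size_t i = 0; i < MAX_REFS; ++i) {
            Object *child = obj->refs[i];
            if (child != NULL && !child->marked) {
                child->marked = true;   /* mark before pushing */
                assert(top < cap);      /* holds if cap >= object count */
                stack[top++] = child;
                ++marked;
            }
        }
    }
    return marked;
}
```

Marking on push (rather than on pop) is what bounds the stack: a node reachable along several paths is pushed only the first time it is seen.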

Sweep

Sweep iterates through the entire heap and frees the memory blocks that are not marked. It also clears the mark bit on the surviving objects so that subsequent GC passes can correctly mark them again (i.e. it resets memory to its pre-mark state).

```c
void Sweep()
{
    Object *pHeap = pHeapStart;
    while (pHeap < pHeapEnd)
    {
        if (!Marked(pHeap))
            Free(pHeap);      // put it to the free object list
        else
            UnMarkBit(pHeap); // reset for the next GC
        pHeap = GetNext(pHeap);
    }
}
```

This relies on a number of assumptions. Some of them are that

- the entire heap can be iterated over,

- both free memory blocks and allocated objects have the same sort of header, which can be queried with Marked,

- given one memory block, you can find the next one (GetNext is implemented), and

- there is some sort of free memory pool that tracks the list of free memory, and you can add a block to it by calling Free(Object *).

A special memory layout is needed to meet these assumptions, and I’ll try to cover how they are handled, at least in .NET CF, in a later post.
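One simple way to satisfy the assumptions listed above is sketched below; it is an illustration, not the .NET CF layout. The heap is carved into contiguous blocks, and every block, free or allocated, starts with the same hypothetical `Block` header recording its length, a mark bit and a free flag. `GetNext` is then just "current block plus its length", and `Free` pushes the block onto a singly linked free list threaded through the free blocks themselves. Measuring lengths in header-sized units lets the heap be a plain array of `Block`, which keeps alignment trivial. All names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Every block, free or allocated, starts with this header. */
typedef struct Block {
    size_t units;             /* block length in header-sized units */
    bool marked;              /* mark bit, set by the (not shown) mark phase */
    bool free;                /* true while the block is on the free list */
    struct Block *next_free;  /* free-list link, used only when free */
} Block;

#define HEAP_UNITS 16
static Block heap[HEAP_UNITS];
static Block *const heap_start = heap;
static Block *const heap_end = heap + HEAP_UNITS;
static Block *free_list = NULL;

/* GetNext: the following block starts right after this one. */
static Block *get_next(Block *b) {
    assert(b->units > 0);  /* a zero-length block would loop forever */
    return b + b->units;
}

/* Free: push the block onto the free list. */
static void free_block(Block *b) {
    b->free = true;
    b->next_free = free_list;
    free_list = b;
}

/* Sweep: visit every block in address order. Unmarked blocks go to the
   free list (rebuilt on each sweep); marked ones are unmarked for the next
   cycle. Returns how many previously allocated blocks were freed. */
static size_t sweep(void) {
    size_t freed = 0;
    free_list = NULL;
    for (Block *b = heap_start; b < heap_end; b = get_next(b)) {
        if (b->marked) {
            b->marked = false;
        } else {
            if (!b->free)
                ++freed;
            free_block(b);
        }
    }
    return freed;
}

/* Test helper: write the header of an allocated block at index `at`. */
static Block *lay_out(size_t at, size_t units) {
    assert(units > 0 && at + units <= HEAP_UNITS);
    heap[at] = (Block){ .units = units };
    return &heap[at];
}
```

A real allocator would also coalesce adjacent free blocks during the sweep; this sketch leaves them as separate list entries to stay short.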

Compaction

Repeated allocation can fragment memory and slow down allocation significantly (head over here for a nice story on this). So many systems combine sweep with compaction, which reduces fragmentation and hence makes subsequent allocations faster. However, when memory is compacted objects move, so all references to them have to be updated to point to the new locations.
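One common way to compact is sliding ("Lisp-2" style) compaction, shown here as a sketch rather than as the scheme any particular runtime uses. It runs after marking and makes three passes: compute each live object's new location (its forwarding address), rewrite every reference (roots and fields) through those forwarding addresses, then slide the live objects down. To keep it short, the hypothetical heap is an array of equal-size `Slot`s and references are slot indices, with `-1` meaning "no reference".

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define SLOTS 8

/* Hypothetical fixed-size object. */
typedef struct {
    bool live;     /* set by the mark phase */
    int ref;       /* index of the referenced slot, or -1 for none */
    int value;     /* payload, to show that data moves with the object */
    int forward;   /* new slot index, computed in pass 1 */
} Slot;

/* Sliding compaction over slots[0..SLOTS). Roots are slot indices and are
   updated in place. Returns the number of live objects, which afterwards
   occupy slots [0, count). */
static int compact(Slot *slots, int *roots, size_t root_count) {
    assert(roots != NULL || root_count == 0);
    int next = 0;
    /* Pass 1: assign forwarding addresses in address order. */
    for (int i = 0; i < SLOTS; ++i)
        if (slots[i].live)
            slots[i].forward = next++;
    /* Pass 2: redirect roots and fields to the new locations. */
    for (size_t r = 0; r < root_count; ++r)
        roots[r] = slots[roots[r]].forward;
    for (int i = 0; i < SLOTS; ++i)
        if (slots[i].live && slots[i].ref >= 0)
            slots[i].ref = slots[slots[i].ref].forward;
    /* Pass 3: slide objects down. Since forward <= i, a live object is
       never overwritten before it has itself been moved. */
    for (int i = 0; i < SLOTS; ++i)
        if (slots[i].live)
            slots[slots[i].forward] = slots[i];
    /* Clear the now-unused tail. */
    for (int i = next; i < SLOTS; ++i)
        slots[i] = (Slot){ .live = false, .ref = -1 };
    return next;
}
```

The price of this approach is the extra passes over the heap and a forwarding field per object. The reference-rewriting pass is exactly the "all references have to be updated" cost mentioned above.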

When is the GC run

On the face of it, it seems that GC should be run when memory allocation fails. However, when memory allocation fails there is a high chance that the GC will fail as well, because it needs some working memory to function, which won’t be available. So in real-world systems the GC is fired under a variety of conditions, such as:

- The system is low on memory (there is still enough for a GC to run, but allocations are soon going to fail)

- Memory is too fragmented

- A certain amount of memory has been allocated since the last GC (e.g. .NET CF fires the GC after 1MB has been allocated since the last GC)

This obviously varies from system to system and is carefully tuned to match the scenarios being supported.
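The trigger conditions listed above can be combined into a single policy check that an allocator might consult before each allocation. This is a hypothetical sketch: the `HeapStats` fields are assumptions, the 1MB allocation budget is borrowed from the .NET CF example, and the low-memory and fragmentation percentages are illustrative values, not ones taken from any real runtime.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical snapshot of heap state maintained by the allocator. */
typedef struct {
    size_t heap_size;            /* total managed heap, in bytes */
    size_t free_bytes;           /* bytes currently free */
    size_t largest_free_block;   /* biggest contiguous free block */
    size_t allocated_since_gc;   /* bytes allocated since the last GC */
} HeapStats;

#define ALLOC_BUDGET   (1024u * 1024u)  /* collect after 1MB of allocation */
#define LOW_MEMORY_PCT 10u              /* illustrative: under 10% free */
#define FRAGMENTED_PCT 25u              /* illustrative: largest free block
                                           under 25% of all free memory */

/* Returns true if any trigger condition holds. */
static bool should_collect(const HeapStats *s) {
    assert(s->heap_size > 0 && s->free_bytes <= s->heap_size);
    bool over_budget = s->allocated_since_gc >= ALLOC_BUDGET;
    bool low_memory  = s->free_bytes * 100 < s->heap_size * LOW_MEMORY_PCT;
    bool fragmented  = s->free_bytes > 0 &&
                       s->largest_free_block * 100 <
                           s->free_bytes * FRAGMENTED_PCT;
    return over_budget || low_memory || fragmented;
}
```

The low-memory check deliberately fires while some memory is still free, so the collector gets to run (and has working memory) before an allocation actually fails.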

Advantages and disadvantages

The primary advantage of mark-sweep is that it handles cyclic references naturally. Moreover, it adds no overhead during normal execution (e.g. allocation or pointer manipulation). Combined with compaction, it ensures good locality of reference and reduced fragmentation, and hence fast subsequent allocations.

However, mark-sweep pauses useful execution and walks the entire memory to mark and sweep it. Hence it introduces long freezes, which are unacceptable in most interactive systems. Incremental variations of mark-sweep are available that work around this limitation. Mark-sweep also starts thrashing when memory fills up: each collection fails to reclaim much memory, so the GC is triggered again and again as memory approaches exhaustion. Self-tuning GC helps in this scenario.