Someone contacted me through my blog about his managed application, whose working set keeps growing until the process eventually runs out of memory. At one point in the email he states that since he is using .NET, there surely cannot be any leaks. I have talked with other folks in the past who think likewise.

However, this is not really true. To get to the bottom of this, we first need to understand what the GC does. Do read http://blogs.msdn.com/abhinaba/archive/2009/01/20/back-to-basics-why-use-garbage-collection.aspx.

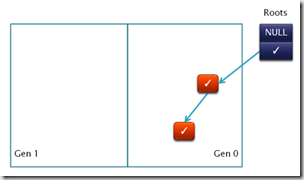

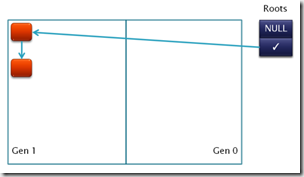

In summary, the GC keeps track of object usage and collects those objects that are no longer referenced by any other object. It ensures that it doesn’t leave dangling pointers. You can find how it does this at http://blogs.msdn.com/abhinaba/archive/2009/01/25/back-to-basic-series-on-dynamic-memory-management.aspx

However, there is a catch to the statement above. The GC can only remove objects that nothing references any more, which is not the same as objects your program no longer needs. Unfortunately, it’s easy to write code in which the references to an object are never completely released.

Example 1: Event Handling (discussed in more detail here).

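Consider the following code:

```csharp
EventSink sink = new EventSink();
EventSource src = new EventSource();

// Subscribing stores a delegate in src whose target is sink
src.SomeEvent += sink.EventHandler;
src.DoWork();

sink = null;

// Force collection
GC.Collect();
GC.WaitForPendingFinalizers();

// Keep src alive up to this point; otherwise an optimized build may treat
// src as dead after DoWork() and collect both objects
GC.KeepAlive(src);
```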

In this example, at the point where we force a GC, our own code no longer holds a reference to sink (we explicitly set sink = null). Even so, sink will not be collected as long as src is alive. The reason is that sink was registered as an event handler, so src holds a reference to sink (so that it can call back into sink.EventHandler when src.SomeEvent is fired), and that reference stops sink from being collected.
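To observe this yourself, here is a minimal sketch that tracks the sink through a WeakReference. The EventSource and EventSink classes are hypothetical stand-ins for the types used above, and EventLeakDemo, Subscribe, Unsubscribe and Collect are names I made up for the illustration. The sink is created in a separate non-inlined method so that no stray local variable keeps it alive.

```csharp
using System;
using System.Runtime.CompilerServices;

// Hypothetical stand-ins for the types used in the example above.
class EventSource
{
    public event EventHandler SomeEvent;
    public void DoWork() => SomeEvent?.Invoke(this, EventArgs.Empty);
}

class EventSink
{
    public void EventHandler(object sender, EventArgs e) { }
}

static class EventLeakDemo
{
    // Subscribes a fresh sink and hands back only a weak reference to it,
    // so the caller never holds a strong reference to the sink.
    [MethodImpl(MethodImplOptions.NoInlining)]
    public static WeakReference Subscribe(EventSource src)
    {
        var sink = new EventSink();
        src.SomeEvent += sink.EventHandler;
        return new WeakReference(sink);
    }

    // Removes the handler again, if the sink is still around.
    [MethodImpl(MethodImplOptions.NoInlining)]
    public static void Unsubscribe(EventSource src, WeakReference weakSink)
    {
        if (weakSink.Target is EventSink sink)
            src.SomeEvent -= sink.EventHandler;
    }

    public static void Collect()
    {
        GC.Collect();
        GC.WaitForPendingFinalizers();
        GC.Collect();
    }

    static void Main()
    {
        var src = new EventSource();
        WeakReference weakSink = Subscribe(src);

        Collect();
        // Still subscribed: src's delegate references the sink, so it survives.
        Console.WriteLine($"Subscribed, after GC: alive = {weakSink.IsAlive}");

        Unsubscribe(src, weakSink);
        Collect();
        // Nothing references the sink now, so it is eligible for collection.
        Console.WriteLine($"Unsubscribed, after GC: alive = {weakSink.IsAlive}");

        GC.KeepAlive(src); // make sure src itself stays alive for the whole test
    }
}
```

While the handler is subscribed the sink stays alive. After the handler is removed I would expect the second line to report that the sink is gone, although the exact timing of a collection is up to the runtime. The takeaway is that unsubscribing with -= is what releases the reference, not setting your own variable to null.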

Example 2: Mutating objects in a collection (discussed here)

There can be even more involved cases. Around 2 years back I saw an issue where objects were being placed inside a Dictionary and later retrieved, used and discarded. Retrieval was done using the object as the key. The flow was something like this:

1. Create the object and put it in a Dictionary

2. Later get the object using the object key

3. Call some functions on the object

4. Again get the object by key and remove it

Now the object was not immutable, and using it in step 3 modified some of its fields. One of those fields was also used to calculate the object’s hash code (in an overridden GetHashCode). This meant the Remove call in step 4 didn’t find the object, and it remained inside the dictionary. Can you guess why changing a field that is used in GetHashCode prevents the object from being found in the dictionary? Check out http://blogs.msdn.com/abhinaba/archive/2007/10/18/mutable-objects-and-hashcode.aspx to know why this happens.
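The four steps above can be reproduced with a small sketch. The Job class and its Id field are hypothetical; they only stand in for whatever mutable type was used as the key in the real application.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical key type whose hash code depends on a mutable field.
class Job
{
    public int Id;
    public Job(int id) { Id = id; }

    public override int GetHashCode() => Id;
    public override bool Equals(object obj) => obj is Job other && other.Id == Id;
}

static class MutableKeyDemo
{
    public static (bool Removed, int Count) Run()
    {
        var cache = new Dictionary<Job, string>();

        var job = new Job(1);
        cache[job] = "payload";            // step 1: stored under hash code 1

        job.Id = 2;                        // step 3: mutates a field used by GetHashCode

        bool removed = cache.Remove(job);  // step 4: looks for hash code 2, finds nothing
        return (removed, cache.Count);
    }

    static void Main()
    {
        var (removed, count) = Run();
        Console.WriteLine($"Removed: {removed}, entries left: {count}");
        // prints "Removed: False, entries left: 1"
    }
}
```

The entry is still in the dictionary, and the dictionary still references the object, so the GC can never collect it while the dictionary itself is alive. Keeping the fields that feed GetHashCode immutable avoids this class of bug.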

There are many more examples where this can happen.

So we can conclude that memory leaks happen in managed code as well, but they typically happen a bit differently: some references are not cleared when they should have been, so the GC finds these objects still referenced by others and does not collect them.