About a month back we announced that in the next release of Windows Phone 7 (codenamed Mango) we will ship a new garbage collector in the CLR. This garbage collector (GC) is a generational garbage collector.

This is a “back to basics” post in which I’ll take a simplified look at how a mark-sweep-compact GC works and how adding generational collection improves its performance. In later posts I’ll elaborate on the specifics of the WP7 generational GC and how to ensure you get the best performance out of it.

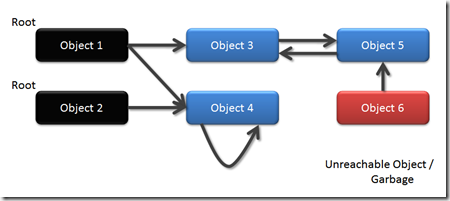

Object Reference Graph

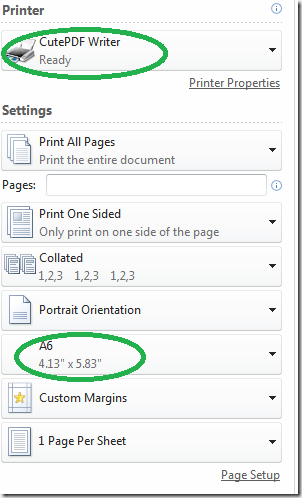

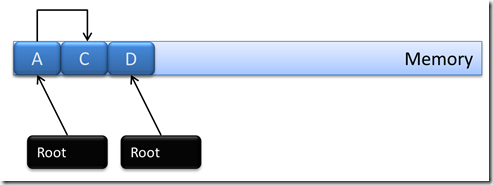

Objects in memory can be viewed as a graph in which every object is a node and every reference from one object to another is an edge, as in the accompanying diagram.

To use an object, the code must be able to “reach” it via some reference. Such objects are called reachable objects (in blue). A method’s local variables, method parameters, global variables and references held by the runtime (e.g. GCHandles) make objects directly reachable. They are the starting points of reference chains and are called the roots (in black).

Other objects are reachable if they are referenced from roots or from other objects that can be reached from the roots. So Object4 is reachable due to the Object2->Object4 reference, and Object5 is reachable because of the Object1->Object3->Object5 reference chain. All reachable objects are valid objects and need to be retained in the system.

On the other hand Object6 is not reachable and is hence garbage, something that the GC should remove from the system.
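As a rough illustration of reachability, the graph above can be sketched in a few lines of Python. Only the Object2->Object4 and Object1->Object3->Object5 edges come from the text; the assumption that the roots point directly at Object1 and Object2, and the `reachable_from` helper itself, are hypothetical and only for illustration.

```python
from collections import deque

# Edges of the object graph. Only Object2->Object4 and
# Object1->Object3->Object5 are stated in the text.
references = {
    "Object1": ["Object3"],
    "Object2": ["Object4"],
    "Object3": ["Object5"],
    "Object4": [],
    "Object5": [],
    "Object6": [],
}
# Assumption: the roots reference Object1 and Object2 directly.
roots = ["Object1", "Object2"]


def reachable_from(roots, references):
    """Return the set of objects reachable from the roots (breadth-first walk)."""
    seen = set(roots)
    queue = deque(roots)
    while queue:
        current = queue.popleft()
        for target in references[current]:
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


live = reachable_from(roots, references)
garbage = set(references) - live
print(sorted(live))     # ['Object1', 'Object2', 'Object3', 'Object4', 'Object5']
print(sorted(garbage))  # ['Object6']
```

Anything the walk never visits, here Object6, is garbage by definition.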

Mark-Sweep-Compact GC

A garbage collector can locate garbage like Object6 in various ways. Some common ways are reference counting, copying collection and mark-sweep. In this section let’s take a more pictorial view of how mark-sweep works.

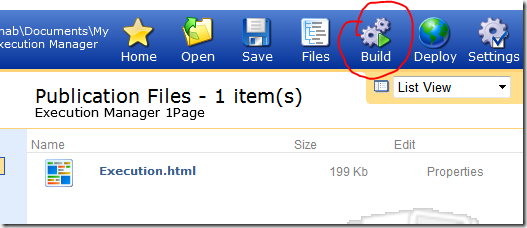

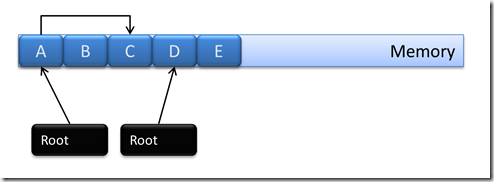

Consider the following object graph

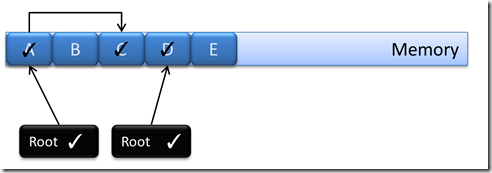

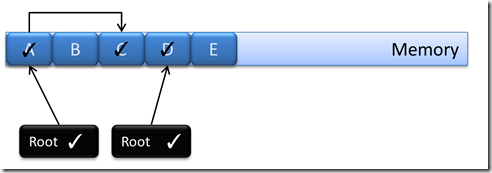

First, the GC pauses the entire application so that the object graph cannot be mutated (that is, no new objects or references are created). Then it enters the mark phase, in which the GC traverses the graph starting at the roots and following the references from object to object. Each time it reaches an object through a reference, it sets a bit in the object header indicating that this object is marked, or in other words reachable (and hence not garbage). At the end everything looks as follows.

So the 2 roots and the objects A, C, D are reachable.
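The mark phase can be sketched roughly as follows. This is a simplified model, not the CLR implementation: the `HeapObject` class, the `marked` field standing in for the header bit, and the specific edges between A, B, C, D and E are all assumptions chosen so that A, C and D end up reachable as in the figure.

```python
class HeapObject:
    """Toy heap object; `marked` stands in for the mark bit in the object header."""

    def __init__(self, name):
        self.name = name
        self.marked = False
        self.refs = []  # outgoing references to other HeapObjects


def mark(roots):
    """Set the mark bit on every object reachable from the roots."""
    stack = list(roots)
    while stack:
        obj = stack.pop()
        if obj.marked:
            continue  # already visited, also guards against cycles
        obj.marked = True
        stack.extend(obj.refs)


a, b, c, d, e = (HeapObject(name) for name in "ABCDE")
a.refs = [c]  # assumed edge
c.refs = [d]  # assumed edge
b.refs = [e]  # assumed edge: B references E, but nothing reaches B
heap = [a, b, c, d, e]

mark(roots=[a, c])  # assumption: the two roots point at A and C
marked_names = [obj.name for obj in heap if obj.marked]
print(marked_names)  # ['A', 'C', 'D']
```

Note that E stays unmarked even though B references it, because B itself is never reached from a root.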

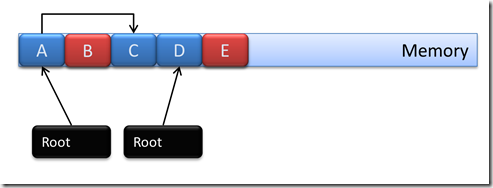

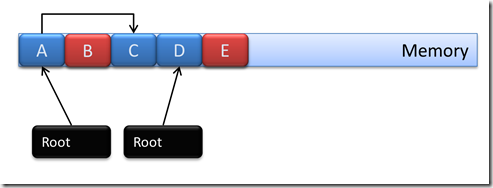

Next it goes into the sweep phase. In this phase it starts from the very first object and examines the header. If the header’s mark bit is set it means that it’s a reachable object and the sweep resets that bit. If the header’s bit is not set, it’s not reachable and is flagged as garbage.

So B and E get flagged as garbage. These areas are then free to be used for other objects or can be released back to the system.
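A minimal sketch of the sweep phase, assuming the mark bits are already set as described above. The dictionary-based heap and the `sweep` function are hypothetical stand-ins for the real object headers and heap walk.

```python
def sweep(heap):
    """Walk the heap in order, reset mark bits on survivors, collect the rest."""
    survivors, freed = [], []
    for obj in heap:
        if obj["marked"]:
            obj["marked"] = False  # reset the bit so the next GC starts clean
            survivors.append(obj)
        else:
            freed.append(obj)  # unmarked means unreachable, so it is garbage
    return survivors, freed


# Heap state after the mark phase: A, C and D are marked, B and E are not.
heap = [
    {"name": "A", "marked": True},
    {"name": "B", "marked": False},
    {"name": "C", "marked": True},
    {"name": "D", "marked": True},
    {"name": "E", "marked": False},
]

survivors, freed = sweep(heap)
print([obj["name"] for obj in survivors])  # ['A', 'C', 'D']
print([obj["name"] for obj in freed])      # ['B', 'E']
```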

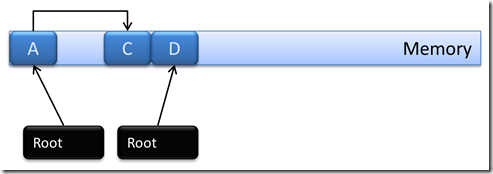

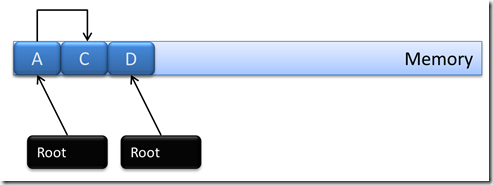

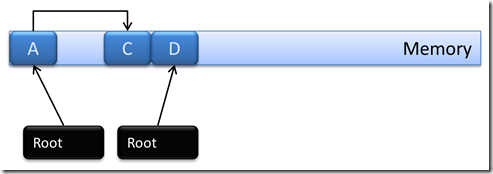

At this point the GC is done and it may resume the execution of the application. However, if too many of those holes (released objects) are created in the system, the memory gets fragmented. To reduce memory fragmentation, the GC may compact the memory by moving objects around. Do note that compaction doesn’t happen on every GC; it is run based on some fragmentation heuristics.

Both C and D are moved here to squeeze out the hole left by B. At the same time, all references to these objects in the system are also updated to point to the correct location.
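Compaction and the accompanying reference fix-up can be sketched like this. The addresses, object sizes, the names of the reference holders and the `compact` helper are all made up for illustration; a real collector works on raw memory rather than tuples.

```python
def compact(live_objects, references):
    """Slide live objects toward address 0 and rewrite references to them.

    live_objects: list of (name, address, size), sorted by address.
    references:   dict mapping a reference holder to the address it points at.
    """
    forwarding = {}  # old address -> new address
    next_free = 0
    moved = []
    for name, address, size in live_objects:
        forwarding[address] = next_free
        moved.append((name, next_free, size))
        next_free += size
    updated = {holder: forwarding[target] for holder, target in references.items()}
    return moved, updated


# Assumed layout after the sweep: B (16..47) and E (80..) were freed.
live_objects = [("A", 0, 16), ("C", 48, 16), ("D", 64, 16)]
references = {"root1": 0, "root2": 48, "A.field": 48, "C.field": 64}

moved, updated = compact(live_objects, references)
print(moved)    # [('A', 0, 16), ('C', 16, 16), ('D', 32, 16)]
print(updated)  # {'root1': 0, 'root2': 16, 'A.field': 16, 'C.field': 32}
```

C and D slide down into the hole left by B, and every holder that pointed at their old addresses now points at the new ones.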

One important thing to note here is that, unlike native objects, managed objects can move around in memory due to compaction, and hence taking direct references to them (a.k.a. memory pointers) is not possible. In case this is ever required, e.g. when a managed buffer is passed to native code such as the microphone driver to copy recorded audio into, the GC has to be notified that the corresponding managed object cannot be moved during compaction. If the GC ran a compaction and the object moved during that microphone PCM data copy, memory corruption would happen because the buffer being copied into would have moved. To prevent that, a GCHandle has to be created for that object with GCHandleType.Pinned to notify the GC that the corresponding object must not be moved.

On WP7 the interfaces to these peripherals and sensors are wrapped by managed interfaces, so the WP7 developer doesn’t really have to do these things; they are taken care of under the hood by those managed interfaces.

The performance issue

As mentioned before, the execution of the application is stopped during the entire GC. So as long as the GC is running, the application is frozen. This isn’t a problem in general because the GC runs pretty fast and infrequently, so small latencies of the order of 10-20 ms are not really noticeable.

However, with WP7 the capability of the device in terms of CPU and memory increased drastically. Games and large Silverlight applications started appearing that used close to 100 MB of memory. As memory use grows, the number of objects and the references between them grows with it. In the scheme explained above, the GC has to traverse every object and its references to mark them and later remove the garbage via sweep. So the GC time also increases drastically and becomes a function of the net working set of the application. This results in very large pauses in large XNA games and SL applications, which finally manifest as long startup times (as the GC runs during startup) or glitches during game play/animation.

Generational Approach

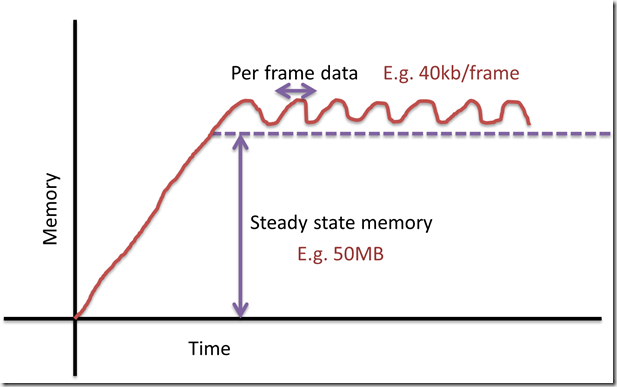

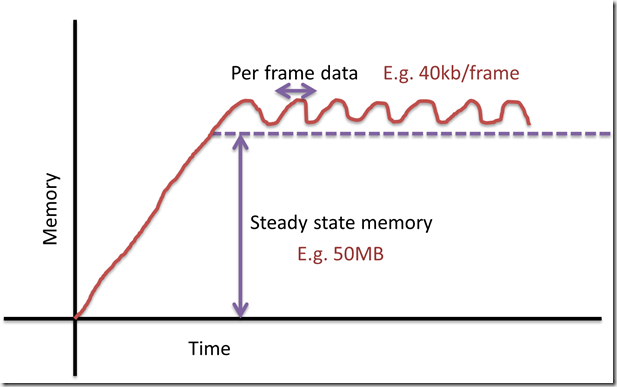

If we take a look at a simplified allocation pattern of a typical game (other apps are actually similar), it looks somewhat like the following.

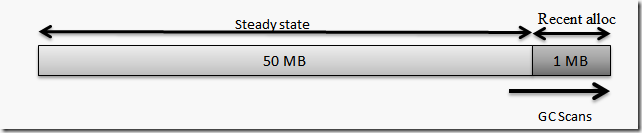

The game has a large steady-state memory footprint that holds much of its steady-state data (which is not released), and per frame it goes on allocating and de-allocating some more data, e.g. for physics, projectiles and frame data. To collect this memory layout, the traditional GC has to walk the entire 50+ MB of data to locate the garbage in it. However, most of the data it traverses will almost never be garbage and will remain in use.

This application behavior underlies the two premises of generational GC:

- Most objects die young

- If an object survives a collection (that is, it doesn’t die young) it will survive for a very long time

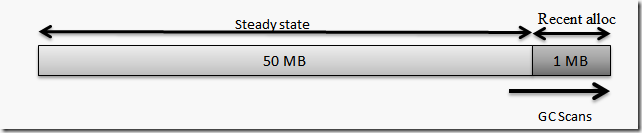

Using these premises, the generational GC segregates the managed heap into older and younger generations of objects. The younger generation, called Gen-0, is collected in each GC (premise 1); this is called the Ephemeral or Gen-0 collection. The older generation is called Gen-1. The GC rarely collects Gen-1, as the probability of finding garbage in it is low (premise 2).

So essentially the GC becomes free of the burden of the net working set of the application.

Most GCs will be ephemeral GCs that traverse only the recently allocated objects, so the GC latency remains very low. After a collection, the surviving objects are promoted to the higher generation. Once a lot of objects have been promoted, the higher generation starts becoming full and a full collection is run (which collects both Gen-1 and Gen-0). However, due to premise 1, ephemeral collections find a lot of garbage in each run and hence promote very few objects. This means the growth rate of the higher generation is low, and hence full collections run very infrequently.
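To get a feel for the difference, here is a toy simulation of the allocation pattern described above. The numbers (1000 steady-state objects, 100 temporaries per frame, 2 survivors per frame, 10 frames) and the `collect_gen0` helper are invented for illustration, and “objects examined” is only a crude stand-in for real GC cost.

```python
def collect_gen0(gen0, gen1, live):
    """Ephemeral collection: examine only Gen-0 and promote the survivors to Gen-1."""
    examined = len(gen0)
    survivors = [obj for obj in gen0 if obj in live]
    gen1.extend(survivors)  # promotion to the older generation
    gen0.clear()            # everything else in Gen-0 was garbage
    return examined


gen1 = [f"steady-{i}" for i in range(1000)]  # assumed long-lived game data
gen0 = []
examined_ephemeral = 0
examined_full = 0

for frame in range(10):
    temps = [f"frame{frame}-tmp{i}" for i in range(100)]  # per-frame allocations
    gen0.extend(temps)
    live = set(temps[:2])  # premise 1: only a couple of them survive

    # A non-generational collector would examine the whole heap each time.
    examined_full += len(gen0) + len(gen1)
    examined_ephemeral += collect_gen0(gen0, gen1, live)

print(examined_ephemeral)  # 1000
print(examined_full)       # 11090
print(len(gen1))           # 1020
```

In this model the ephemeral collections look at roughly a tenth of what whole-heap collections would, and Gen-1 grows by only two objects per frame, which is why a full collection is rarely needed.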

Ephemeral/Gen-0 collection

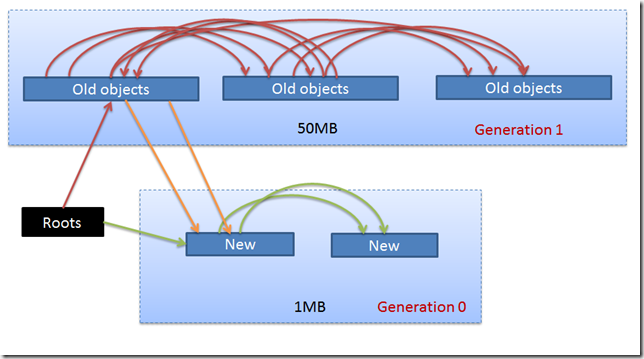

Even in an ephemeral collection the GC needs to deterministically find all objects in Gen-0 that are not reachable. This means the following objects need to survive a Gen-0 collection:

- Objects directly reachable from roots

- Root –> Gen0 –> Gen-0 objects (indirectly reachable from roots)

- Objects referenced from Gen1 to Gen0

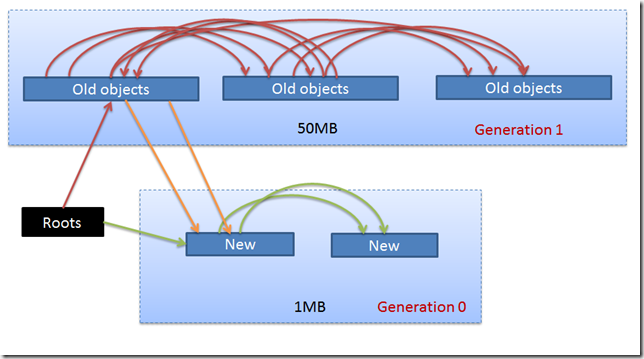

The first two cases pose no issues because in an ephemeral GC we scan all roots and Gen-0 objects anyway. However, to find Gen-1 objects that reference objects in Gen-0, we would have to traverse and look into all Gen-1 objects. This would defeat the very purpose of segregating the memory into generations. To handle this, a write-barrier plus card-table technique is used.

The runtime maintains a special table called the card table. Each time a reference is stored in managed code, e.g. a.b = c;, the code JITed for this assignment also updates this internal data structure (the CardTable) to capture that write. Later, during the ephemeral collection, the GC looks into that table to find every ‘a’ that took a new reference. If that ‘a’ is a Gen-1 object and ‘c’ is a Gen-0 object, then it marks ‘c’ recursively (which means all objects reachable from ‘c’ are also marked). This technique ensures that we can still find all live objects in Gen-0 without examining all the Gen-1 objects. However, the cost paid is:

- Updating an object reference is now a little more costly

- Making old objects take references to new objects increases GC latency (more about this in later posts)
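The write-barrier and card-table idea can be sketched as below. This is a deliberately simplified model: a real card table is typically a compact table covering address ranges rather than a set of objects, and the `Obj` class, `write_ref` and `mark_gen0` are hypothetical names, not CLR APIs.

```python
class Obj:
    """Toy managed object with a generation number and reference fields."""

    def __init__(self, name, generation):
        self.name = name
        self.generation = generation
        self.fields = {}


card_table = set()  # simplification: remembers every object that was written to


def write_ref(holder, field, target):
    """Write barrier: perform the store (a.b = c) and record the holder."""
    holder.fields[field] = target
    card_table.add(holder)


def mark_gen0(roots):
    """Ephemeral mark: start from roots and from Gen-1 holders in the card table."""
    stack = [r for r in roots if r.generation == 0]
    for holder in card_table:
        if holder.generation == 1:
            stack.extend(t for t in holder.fields.values() if t.generation == 0)
    marked = set()
    while stack:
        obj = stack.pop()
        if obj in marked:
            continue
        marked.add(obj)
        # Follow only Gen-0 targets; Gen-1 objects are never traversed here.
        stack.extend(t for t in obj.fields.values() if t.generation == 0)
    return marked


old = Obj("a", generation=1)
c = Obj("c", generation=0)
d = Obj("d", generation=0)
e = Obj("e", generation=0)       # never referenced, so it is garbage
local = Obj("local", generation=0)

write_ref(old, "b", c)           # Gen-1 -> Gen-0 reference, caught by the barrier
write_ref(c, "next", d)          # Gen-0 -> Gen-0 reference

marked = mark_gen0(roots=[old, local])
print(sorted(obj.name for obj in marked))  # ['c', 'd', 'local']
```

The Gen-1 object is consulted only because the barrier recorded it; no other Gen-1 object would need to be visited to discover that ‘c’ and ‘d’ are live.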

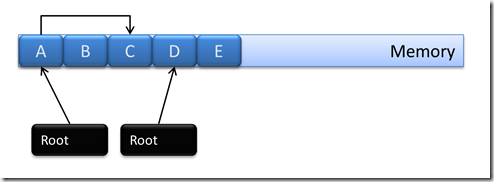

Putting it all together, the traditional GC would traverse all references shown in the diagram below. However, an ephemeral GC can work without traversing the huge number of Red references.

It scans all the Roots to Gen-0 references (green) directly. It traverses all the Gen1->Gen0 references (orange) via the CardTable data structure.

Conclusion

- Generational GC reduces the GC latency by avoiding looking up all objects in the system

- Most collections are Gen-0 (ephemeral) collections with low latency, which ensures fast startup and low latency during game play

- However, depending on how many objects are promoted, full GCs do run occasionally. When they run, they exhibit the same latency as a GC on the previous WP7 collector

Given the above, most applications will see a startup and in-execution perf boost without any modification. E.g. today, if an application allocates 5 MB of data during startup and the GC runs after every 1 MB of allocation, then it traverses 15 MB in total (1 + 2 + 3 + 4 + 5). However, with GenGC it might get away with traversing as little as 5 MB.

In addition, game developers in particular can optimize their in-gameplay allocations so that no full collection occurs during the entire game play and only low-latency ephemeral collections happen.

How well the generational scheme works depends on a lot of parameters and has some nuances. In subsequent posts I will dive into the details of our implementation, some of the design choices we made, and what developers need to do to get the most value out of it.
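The arithmetic behind that example can be spelled out as follows. It assumes, as the example does, that everything allocated during startup stays in the heap, and that in the best case each ephemeral collection only has to look at the most recent 1 MB; the variable names are just for illustration.

```python
chunk_mb = 1   # a GC is assumed to run after every 1 MB allocated
total_mb = 5   # startup allocation from the example
collections = total_mb // chunk_mb

# Non-generational: each collection walks everything allocated so far.
traversed_full = sum(chunk_mb * n for n in range(1, collections + 1))

# Generational best case: each ephemeral collection walks only the newest chunk.
traversed_generational = chunk_mb * collections

print(traversed_full, traversed_generational)  # 15 5
```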