Bong Geek - Abhinaba Basu

Bong is an affectionate slang term for people from Bengal (where I am from)


Monday, November 28, 2022

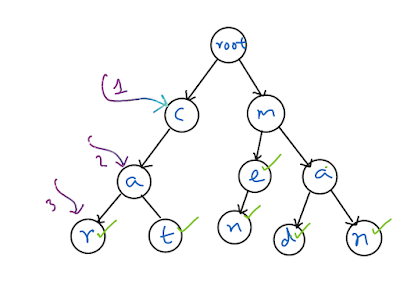

Wordament Solver

Wednesday, March 02, 2022

CAYL - Code as you like day

There will be no scheduled work items and no standups.

We simply do stuff we want to do. Examples include, but are not limited to:

- Solve a pet peeve (e.g. fix a bug that is not scheduled but you really want to get done)

- A cool feature

- Learn something related to the project that you always wanted to figure out (how do we use fluentd to process events, what is helm)

- Learn something technical (how do Go channels work, Go assembly code)

- Shadow someone from a sibling team and learn what they are working on

I would say we have had great success with it. We have had CAYL projects all over the spectrum:

- Speed up build system and just make building easier

- ML Vision device that can tell you which bin trash needs to go in (e.g. if it is compostable)

- Better BVT system and porting it to work on our Macs

- Pet peeves like making function naming more uniform, removing TODOs from code, fixing spelling/grammar etc.

- Better logging and error handling

- Fix SQL resiliency issues

- Move some of our older custom management VMs to AKS

- Bring in gomock, go vet, static checking

- 3D game where mommy penguin gets fish for her babies and learns to be optimal using machine learning

- Experiment with Prometheus

- A dev spent a day shadowing a dev from another team to learn the cool tech they are using, etc.

Monday, February 07, 2022

Go Generics

Every month in our team we do a Code as You Like Day, which is basically a day of taking time off regular work and hacking something up, learning something new or even fixing some pet-peeves in the system. This month I chose to learn about go-lang generics.

I started using Go many years back, coming mainly from C++ and C#. Also, at Adobe almost 20 years back, I got a week-long class on generic programming from Alexander Stepanov himself. I missed generics terribly and hated all the code I had to hand-roll for custom container types. So I was looking forward to generics in Go.

This was also the first time I tried to use a non-stable version of Go, as generics are currently available only in Go 1.18 Beta 2. Installing it was a bit confusing for me.

I just attempted go install which seemed to work
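Since generics were the point of the exercise, here is a minimal sketch of the kind of custom container that previously had to be hand-rolled per element type, written with Go 1.18 type parameters. `Stack` and `Map` are illustrative names, not code from the original exercise, and the sketch needs Go 1.18 or later (the 1.18 betas included) to compile.

```go
package main

import "fmt"

// Stack is a minimal generic LIFO container. Before Go 1.18 a type like this
// had to be written once per element type (or built on interface{}).
type Stack[T any] struct {
	items []T
}

// Push adds v to the top of the stack.
func (s *Stack[T]) Push(v T) {
	s.items = append(s.items, v)
}

// Pop removes and returns the top element. The boolean is false when the
// stack is empty, in which case the zero value of T is returned.
func (s *Stack[T]) Pop() (T, bool) {
	var zero T
	if len(s.items) == 0 {
		return zero, false
	}
	v := s.items[len(s.items)-1]
	s.items = s.items[:len(s.items)-1]
	return v, true
}

// Map applies f to every element of in; T and U are inferred at the call site.
func Map[T, U any](in []T, f func(T) U) []U {
	out := make([]U, 0, len(in))
	for _, v := range in {
		out = append(out, f(v))
	}
	return out
}

func main() {
	var s Stack[string]
	s.Push("hello")
	s.Push("generics")
	top, _ := s.Pop()
	fmt.Println(top) // generics

	lengths := Map([]string{"go", "1.18"}, func(x string) int { return len(x) })
	fmt.Println(lengths) // [2 4]
}
```

The `any` constraint accepts every type; a container that needs to compare its elements would use a tighter constraint such as `comparable`.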

Tuesday, June 16, 2020

Raspberry Pi Photo frame

This small project brings together a bunch of my hobbies. I got to play with carpentry, photography and software/technology, including face detection.

I have run out of places in the home to hang photo frames, and as a way around that I was planning to get a digital photo frame. When I upgraded my home desktop to 2 x 4K monitors, I had my old Dell 28" 1080p monitor lying around. I used that and a Raspberry Pi to create a photo frame. It boasts the following features:

- A real handmade frame

- 1080p display

- Auto sync from OneDrive

- Remotely managed

- Face detection based image crop

- Low cost (uses raspberry pi)

Construction

In my previous project, a smart mirror, I focused way too much on framing the monitor and ended up with the Raspberry Pi and the monitor so well enclosed inside the frame that I had a hard time accessing them and replacing stuff. So this time my plan was to build a simple, lightweight frame that attaches to the monitor with velcro fasteners, so that I can easily remove it. The monitor stands on its own base, so the frame is purely cosmetic and doesn't bear the load of the monitor. Rather, the monitor and its base hold the frame in place.

On the back of the frame I attached a small piece of wood, on which I added velcro. I also glued velcro to the top of the monitor. These two strips of velcro keep the frame on the monitor.

Now the frame can be attached loosely to the monitor just by placing it on top.

After that I got a Raspberry Pi, connected it to the monitor using an HDMI cable and attached the Raspberry Pi to the frame with zip ties. All low tech up to this point.

On powering up, it boots into Raspbian.

Software

Base Setup

Push Pics

I use Lightroom for managing the photos I take. My workflow for this case is as follows:

- All images are tagged with the keyword "frame" in Lightroom.

- I use a smart folder to see all these images and then publish them to a folder named Frame in OneDrive

Sync OneDrive to Raspberry Pi

- curl -L https://raw.github.com/pageauc/rclone4pi/master/rclone-install.sh | bash

- rclone config

- Enter n (for a new connection) and then press Enter

- Enter a name for the connection (I'll enter onedrive) and press Enter

- Enter the number for OneDrive

- Press Enter for the client ID

- Press Enter for the client secret

- Enter n for "Edit advanced config"

- Enter y for auto config

- A browser window will now open; log in with your Microsoft account and select Yes to allow access to OneDrive

- Choose the option for OneDrive Personal

- Now select the OneDrive you would like to use. You will probably have only one OneDrive linked to your account, which will be 0

- Enter y for the subsequent questions

- To Sync once: rclone sync -v onedrive:Frame /home/pi/frame

- Set up automatic sync every hour

- echo "rclone sync -v onedrive:Frame /home/pi/frame" > ~/sync.sh

- chmod +x ~/sync.sh

- crontab -e

- Add the line: 1 * * * * /home/pi/sync.sh

Setup Screensaver

- Disable screen blanking after some time of no use

- sudo vi /etc/lightdm/lightdm.conf

- Add the lines

[SeatDefaults]
xserver-command=X -s 0 -dpms

- Enable auto-login, so that on restart you get logged in directly and then go into the screensaver

- sudo raspi-config

- Select 'Boot Options', then 'Desktop / CLI', then 'Desktop Autologin'. Then press the right arrow twice, select Finish and reboot.

- Set up the screensaver

- sudo apt-get -y install xscreensaver

- sudo apt-get -y install xscreensaver-gl-extra

These are my screensaver settings to show the photos in /home/pi/frame as a slideshow

Problems and Solving Them with Face Detection

Monday, June 15, 2020

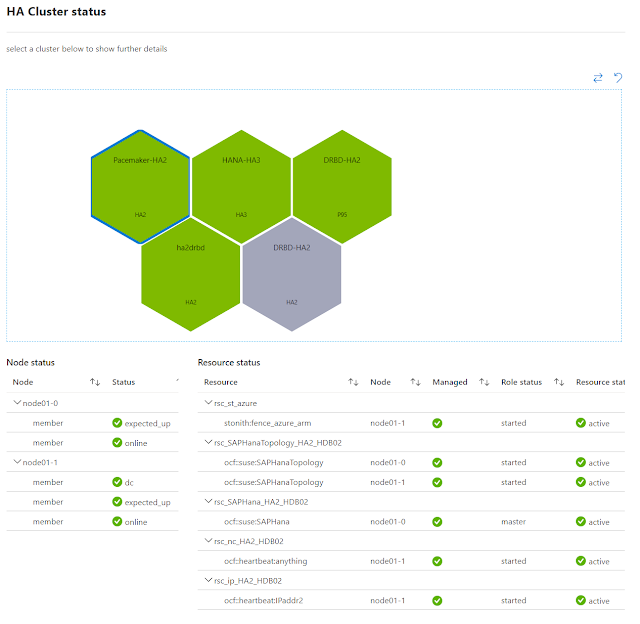

Building Azure Monitor for SAP Solutions

The Product

Update: Here is a quick-start video.

"Azure Monitor for SAP Solutions" provides managed monitoring for the databases powering customers' SAP landscapes. Our monitoring supports multiple instances of databases of a particular type (e.g. HANA) and is also extensible to various kinds of databases. We have started with HANA and plan to include SQL Server, etc. in the future. At the time of writing this post, the monitoring is in private preview, with public preview coming "soon".

In the screenshot below, the visualization shows all the database clusters of our test cluster in one place. Selecting any cluster drills down further into the health of each DB node.

Similarly, in the following visualization we see that a cluster is unhealthy, and on drilling down, that a node is yellow (warning state) because it is triggering our high CPU usage threshold (>50%).
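The drill-down above amounts to a health roll-up from nodes to clusters. A minimal sketch, assuming a node is in the warning state when CPU usage exceeds the 50% threshold and a cluster takes the worst state of its nodes; the `Health` type, the function names and the roll-up rule are hypothetical, not the product's actual implementation.

```go
package main

import "fmt"

// Health is a simplified node/cluster state (hypothetical; the product's
// real set of states is not described in the post).
type Health int

const (
	Healthy Health = iota
	Warning
)

// String makes Health print as a readable name.
func (h Health) String() string {
	if h == Warning {
		return "Warning"
	}
	return "Healthy"
}

// cpuWarningThreshold mirrors the ">50%" high-CPU threshold from the post.
const cpuWarningThreshold = 50.0

// NodeHealth puts a node in the warning state when CPU usage exceeds the threshold.
func NodeHealth(cpuPercent float64) Health {
	if cpuPercent > cpuWarningThreshold {
		return Warning
	}
	return Healthy
}

// ClusterHealth rolls node states up to the cluster: the cluster takes the
// worst state of any of its nodes (an assumed roll-up rule).
func ClusterHealth(nodeCPU map[string]float64) Health {
	worst := Healthy
	for _, cpu := range nodeCPU {
		if h := NodeHealth(cpu); h > worst {
			worst = h
		}
	}
	return worst
}

func main() {
	cluster := map[string]float64{"hana-node-1": 12.5, "hana-node-2": 73.0}
	fmt.Println(ClusterHealth(cluster)) // Warning
	fmt.Println(NodeHealth(12.5))       // Healthy
}
```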

Architecture

If the architecture seems familiar, it is because a large part of it is shared with how we manage BareMetal blades running in-memory databases (HANA) in Azure, which I have posted about here.

The regional Resource Provider or RP

Deployment

- The RP creates various networking components (NSG, NIC)

- Creates a storage account and storage queues

- Uses KeyVault to deploy DB access secrets. We do not store these; they remain encrypted in transit and at rest inside a customer-owned KeyVault

- Creates a Log Analytics workspace

- Creates a collector VM (of type B2ms) in the resource group

- The VM uses the custom script extension to bootstrap Docker and pulls down the monitoring payload Docker image

The Payload

The commands used to install, launch and manage individual sub-monitors are in sapmon.py. Specific payloads are in files such as saphana.py in that folder.

Our payload VM fetches the Docker image built from these sources from our container registry at the following location:

mcr.microsoft.com/oss/azure/azure-monitor-for-sap-solutions

Scalability

Tuesday, May 05, 2020

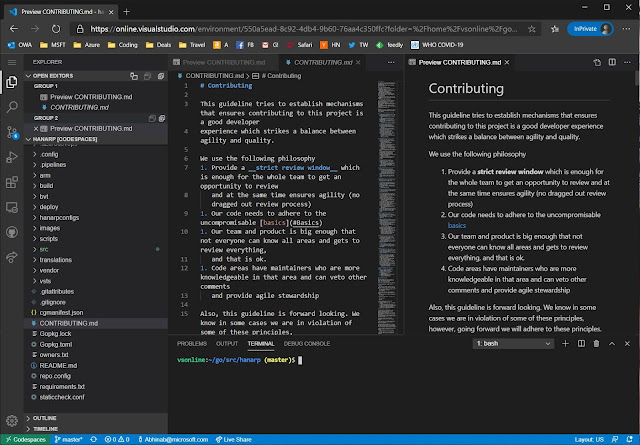

Using Visual Studio Codespaces

One of the pain points we face with remote development is having to go through a few extra hops to get to our virtual dev boxes. Many of us use Azure VMs for development (in addition to local machines), and our security policy is to lock down all VMs to our Microsoft corporate network.

So to ssh or RDP into an Azure VM for development, we first connect over VPN to the corporate network and then use a corpnet machine to log in to the VMs. That is painful, even more so now that we are working remotely.

This is where the newly announced Visual Studio Codespaces comes in. Basically, it is VS Code hosted in the cloud. It runs beautifully inside a browser and, best of all, comes with full access to the Linux shell underneath. Since it runs as a service secured by the Microsoft team building it, we can simply use it from a browser on any machine (with two-factor authentication, obviously).

At the time of writing this post, the cost is around $0.17 per hour for 4 cores/8 GB, which caps the price at around $122 for a whole month. Codespaces also has a snooze feature. I set snooze to kick in after one hour of no usage. This does mean additional startup time when you next log in, but it saves even more money. Between snoozes the state of the box is retained.
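The ~$122 ceiling is just the hourly rate times the hours in a 30-day month (0.17 × 24 × 30 = 122.40), and snoozing reduces the billed hours. A small sketch of that arithmetic; the 9-hour day (8 working hours plus the one-hour idle window before snooze) over 22 working days is a hypothetical usage pattern, and the sketch assumes snoozed hours are not billed at the compute rate.

```go
package main

import "fmt"

// MonthlyCost estimates the bill for a machine that is billed only for the
// hours it is active (assumes snoozed hours are not billed at this rate).
func MonthlyCost(ratePerHour, activeHoursPerDay float64, days int) float64 {
	return ratePerHour * activeHoursPerDay * float64(days)
}

func main() {
	// Never snoozed, 30-day month at $0.17/hour: the ~$122 ceiling.
	fmt.Printf("always on:   $%.2f\n", MonthlyCost(0.17, 24, 30)) // always on:   $122.40
	// Hypothetical: 8 working hours + 1 idle hour before snooze, 22 days.
	fmt.Printf("with snooze: $%.2f\n", MonthlyCost(0.17, 9, 22)) // with snooze: $33.66
}
```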

While just being able to use the IDE on our code base is cool in itself, having access to the shell underneath is even cooler. Hit Ctrl+` in VS Code to bring up the terminal window.

I then synced my Linux development environment from https://github.com/abhinababasu/share and installed the packages I need. Finally I have a full shell and IDE in the cloud, just the way I want it.

To try out Codespaces head to https://aka.ms/vso-login

Sunday, April 26, 2020

Managing Baremetal blades in Azure

In this post I give a brief overview of how we run the control plane of BareMetal Compute in Azure, which powers SAP HANA Large Instances (in-memory databases running on extremely high-memory machines). We support different types of BareMetal blades that go all the way up to 24 TB RAM, including special memory support like Intel Optane (persistent memory). Unlike with Virtual Machines, customers get full root access to the BareMetal physical machine, but still behind network-level security sandboxing.

A few years back, when we started the project, we faced some daunting challenges. We were trying to get custom-built, SAP HANA certified bare-metal machines into Azure DCs, fit them in standard Azure racks, and then manage them at scale and expose control knobs to customers in the Azure Portal. These are behemoths going up to 24 TB RAM, and depending on size, different OEMs were providing us the blades, storage, networking gear and fiber-channel devices.

We quickly realized that most of the native Azure compute stack would not work, because it is built with design assumptions that do not hold for us.

- Azure fleet nodes or blades are built to Microsoft specifications and have a common-denominator management API surface and monitoring, but we were bringing in disparate, externally certified HW that did not meet that bar

- Our model needed to provide customers with full root access to the bare-metal blades, and they were not isolated behind a hypervisor

- The allocator and other logic in Azure were not location aware. E.g. we had custom NetApp storage literally placed beside the high-memory compute for the very low-latency, high-throughput usage that SAP HANA in-memory databases require

- We had storage and networking requirements in terms of uptime, latency and throughput that were not met by standard Azure storage and networking, and hence we had to build our own.

- We differed from Azure in basic layout, e.g. our blades do not have any local storage and everything runs off remote storage, and we had a different NW topology (many HW NICs per blade with very different I/O requirements).

- Be frugal on resourcing

- Rely on external services instead of trying to build in-house

- Design for maintainability

Architecture

Customer Experience

The customer interacts with our system using either the Azure Portal (screenshot above), the command-line tools or the SDK. We built extensions to the Azure Portal for our product sub-area. All resources in Azure are exposed using standardized RESTful APIs. We publish the Swagger spec here, and the CLI and SDK are generated from it.

The regional Resource Provider or RP

We pump both metrics (hot path) and logs (warm path) into our Azure-wide internal telemetry pipeline called Jarvis. We then add backend alerts and dashboards on the metrics for near real-time alerting, and in some cases also on the logs (using logs-to-metrics). The data is also ingested into Azure Kusto (aka Data Explorer), which is a log analytics platform. The alerts tell us if something has gone wrong (severity two and above alerts ring on-call phones), and then we use the logs in Jarvis or Kusto queries to debug.

Also, it's worth calling out that all control flow across pods and across the services is encrypted in transit over nginx and linkerd. The data that we store in SQL Server is also encrypted in transit and at rest.

Tech usage: AKS, Kubernetes, linkerd, nginx, helm, linux, Docker, go-lang, Python, Azure Data-explorer, Azure service-bus-queue, Azure SQL Server, Azure DevOps, Azure Container Registry, Azure Key-vault, etc.

Cluster Manager

We have a cluster manager (CM) per compute cluster. The cluster manager runs on AKS. The AKS VNet is connected over Azure ExpressRoute to the management VLAN of the cluster, which contains all our compute, storage and networking devices. All of these devices are in a physical cluster inside an Azure datacenter.

We wanted the design to let the cluster manager be implemented with a lot of versatility and evolve without depending on the BmRP. The reason is that we imagined the cluster manager could one day also run in remote locations (edge sites), and we weren't sure whether we could call into the CM from outside or have some other sort of persistent connectivity. So we chose Azure Service Bus Queue for communication, with simple JSON commands and responses going between BmRP and CM. This only requires that the CM can make GET calls on the SBQ endpoint, and nothing more, to talk to BmRP.
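A minimal sketch of what a simple JSON command/response exchange between the RP and the CM could look like. The message fields, the `powerOff` action and the `Handle` function are hypothetical; the Service Bus transport is deliberately left out, so this only shows encoding and decoding with Go's standard `encoding/json` package.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Command is a hypothetical request the RP would enqueue for a cluster manager.
type Command struct {
	ID        string            `json:"id"`     // correlation ID echoed in the response
	Action    string            `json:"action"` // e.g. "powerOff" (hypothetical)
	Target    string            `json:"target"` // device identifier within the cluster
	Arguments map[string]string `json:"arguments,omitempty"`
}

// Response is a hypothetical reply the cluster manager would send back.
type Response struct {
	CommandID string `json:"commandId"`
	Status    string `json:"status"` // "succeeded" or "failed"
	Error     string `json:"error,omitempty"`
}

// Handle decodes a command, "executes" it and encodes the response.
// Device-specific work is stubbed out; only the message shape is shown.
func Handle(raw []byte) ([]byte, error) {
	var cmd Command
	if err := json.Unmarshal(raw, &cmd); err != nil {
		return nil, fmt.Errorf("decode command: %w", err)
	}
	resp := Response{CommandID: cmd.ID, Status: "succeeded"}
	if cmd.Target == "" {
		resp.Status = "failed"
		resp.Error = "missing target"
	}
	return json.Marshal(resp)
}

func main() {
	raw, _ := json.Marshal(Command{ID: "42", Action: "powerOff", Target: "blade-07"})
	out, err := Handle(raw)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out)) // {"commandId":"42","status":"succeeded"}
}
```

Echoing the command ID in the response lets the RP correlate replies with requests, which matters when both travel through queues rather than over a synchronous call.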

The cluster manager has two major functions

- Provide a device agnostic control abstraction layer to the RP

- Monitor various devices (compute, storage and NW) in the cluster

Abstraction

Telemetry and Monitoring

We use a fluentd-based pipeline for actively monitoring all devices in the cluster. We use active monitoring, which means that not only do we listen to the various events generated by the devices in the cluster, we also call into these devices to get more information.

The devices in our cluster use various types of event mechanisms. We configure these events, such as syslog, SNMP and Redfish events, to be sent to the load balancer of the AKS cluster. When one of the fluentd endpoints gets an event, it is sent through a series of input plugins. Some of the plugins filter out noisy events we do not care about; others call back into the device that generated the event to get more information and augment the event.

Finally, the output plugins send the result into our Jarvis telemetry pipeline and other destinations. Many of the plugins we use or have built are open sourced, like here, here, here and here. Some critical events, such as a blade power-state change (reboot), are also sent back through the service bus queue to the BmRP so that it can store blade power-state information in our database.
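The filter-then-augment chain can be sketched as a list of stages, each of which either drops an event or passes a possibly enriched copy on. This is a Go illustration of the concept only, not fluentd's plugin API (fluentd plugins are written in Ruby); `Event`, `Stage` and the device-lookup callback are hypothetical.

```go
package main

import "fmt"

// Event is a simplified device event (e.g. from syslog, SNMP or Redfish).
type Event struct {
	Source  string
	Kind    string
	Details map[string]string
}

// Stage returns a (possibly augmented) event and true, or false to drop it.
type Stage func(Event) (Event, bool)

// DropNoisy filters out event kinds we do not care about.
func DropNoisy(noisy map[string]bool) Stage {
	return func(e Event) (Event, bool) {
		return e, !noisy[e.Kind]
	}
}

// Augment merges extra details into the event; lookup stands in for a
// plugin that calls back into the device that generated the event.
func Augment(lookup func(source string) map[string]string) Stage {
	return func(e Event) (Event, bool) {
		merged := map[string]string{}
		for k, v := range e.Details {
			merged[k] = v
		}
		for k, v := range lookup(e.Source) {
			merged[k] = v
		}
		e.Details = merged
		return e, true
	}
}

// Run passes e through each stage in order, stopping as soon as one drops it.
func Run(e Event, stages ...Stage) (Event, bool) {
	for _, s := range stages {
		var keep bool
		e, keep = s(e)
		if !keep {
			return Event{}, false
		}
	}
	return e, true
}

func main() {
	lookup := func(source string) map[string]string {
		return map[string]string{"rack": "rack-3"} // hypothetical device metadata
	}
	stages := []Stage{DropNoisy(map[string]bool{"heartbeat": true}), Augment(lookup)}

	if e, ok := Run(Event{Source: "blade-07", Kind: "powerStateChange"}, stages...); ok {
		fmt.Println(e.Kind, e.Details["rack"]) // powerStateChange rack-3
	}
	_, ok := Run(Event{Source: "blade-07", Kind: "heartbeat"}, stages...)
	fmt.Println(ok) // false
}
```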

Generally we rely on events being sent from devices to the fluentd endpoint for monitoring. Since many of these events come over UDP, and the telemetry pipeline itself runs on an AKS cluster that is remote from the actual devices, we expect some of the events to get dropped. To ensure high reliability, we also have backup polling. At periodic intervals the cluster manager reaches out to the equipment in the cluster, using whatever APIs that equipment supports, to get its status and fault state (if any). Between the events and backup polling we get both near real-time and reliable coverage.
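The events-plus-backup-polling design maps naturally onto a Go `select` loop: pushed events are forwarded as they arrive, and a ticker triggers a poll of every device as a backstop. A minimal sketch; `Status`, `Monitor` and the `poll`/`emit` hooks are hypothetical stand-ins for the device APIs and the Jarvis sink.

```go
package main

import (
	"fmt"
	"time"
)

// Status is a device status report, either pushed as an event or polled.
type Status struct {
	Device string
	Fault  string // empty when the device reports no fault
	Polled bool   // true when obtained by backup polling
}

// Monitor forwards pushed events as they arrive and, on every tick, polls all
// devices as a backstop for events lost in transit (e.g. dropped UDP packets).
// poll and emit are hypothetical hooks for device APIs and the telemetry sink.
func Monitor(events <-chan Status, interval time.Duration, devices []string,
	poll func(device string) Status, emit func(Status), done <-chan struct{}) {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		select {
		case s, ok := <-events:
			if !ok {
				events = nil // source closed: disable this case, keep polling
				continue
			}
			emit(s)
		case <-ticker.C:
			for _, d := range devices {
				s := poll(d)
				s.Polled = true
				emit(s)
			}
		case <-done:
			return
		}
	}
}

func main() {
	events := make(chan Status, 1)
	done := make(chan struct{})
	poll := func(d string) Status { return Status{Device: d} } // stub: no faults
	emit := func(s Status) { fmt.Printf("%+v\n", s) }

	events <- Status{Device: "blade-07", Fault: "thermal"}
	go func() {
		time.Sleep(250 * time.Millisecond)
		close(done)
	}()
	Monitor(events, 100*time.Millisecond, []string{"blade-07", "storage-1"}, poll, emit, done)
}
```

Setting a closed event channel to nil disables that `select` case, so polling keeps running even if the event source goes away.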

Whether from events or from polling, all telemetry is sent to our Jarvis pipeline. We then have Jarvis alerts configured on these events. E.g. if a thermal event occurs and the temperature of a storage node or blade goes over a threshold, the alert fires. The same goes for cases like a blade crashing due to HW issues.

Tech usage: AKS, Kubernetes, linux, Docker, fluentd, Ruby, go-lang, Python, Azure service-bus-queue, snmp, ipmi, Redfish, ONTAP, syslog, etc.

Scaling and Reliability

Monday, February 17, 2020

System Engineering Guidelines

- Close on broad design before sending PRs.

- Add the design as markdown to the root of the feature's code path and discuss it as a PR. For broad cross-RP features it is OK to place it in the root

- For sizable features please have a design meeting

- A design meeting requires sending a pre-read, and the expectation is that all attendees have reviewed the pre-read before coming in

- Be aware of distributed system quirks

- Think CAP theorem. This is a distributed system, so network partitions will occur; be explicit about your availability and consistency model in that event

- All remote calls will fail at some point; have retries that use exponential back-off. Log a warning on retries and an error if the call finally fails

- Ensure we always have consistent state. There should be only 1 authoritative version of truth. Having local data that is eventually consistent with this truth is acceptable. Know the max time-period for eventual consistency

- System needs to be reliable, scalable and fault tolerant

- Always avoid SPOF (Single Point of Failure), even for absolutely required resources like SQLServer consider retrying (see below), gracefully fail and recover

- Have retries

- APIs need to be responsive and return in sub second for most scenarios. If something needs to take longer, immediately return with a mechanism to track progress on the background job started

- All APIs and actions we support should have a 99.9% uptime/success SLA. Shoot for 99.95%

- Our system should be stateless (have state elsewhere in data-store) and designed to be cattle and not pets

- Systems should be horizontally scalable. We should be able to simply add more nodes to a cluster to handle more traffic

- Choose to use a managed service over attempting to build it or deploy it in-house

- Treat configuration as code

- Breaks due to out of band config changes are too common. So consider config deployment the same way as code deployment (Use SCD == Safe Config/Code Deployment)

- Config should be centralized. Engineers shouldn't be hunting around to look for configs

- All features must have feature flag in config.

- The feature flag can be used to disable features on a per-region basis

- Once a feature flag is disabled the feature should cause no impact to the system

- Try to make sure your system works on a single box

- This makes dev-test significantly easier. Mocking auxiliary systems is OK

- Never delete things immediately

- Don't delete anything instantaneously, especially data. Tombstone deleted data away from user view

- Keep data, metadata, machines around for a garbage collector to periodically delete at configurable duration.

- Strive to be event driven

- Polling is bad as the primary mechanism

- Start with event driven approach and have fallback polling

- Have good unit tests.

- All functionality needs to ship with tests in the same PR (no test PR later)

- Unit tests test the functionality of units (e.g. classes/modules)

- They do not have to test every internal function. Do not write tests for tests' sake. If the tests cover all scenarios exposed by a unit, it is OK to push back on comments like "test all methods".

- Think about what your unit implements and whether the tests can validate that the unit still works after any change to it

- Similarly, if you add a reference to a unit from outside and depend on a behavior, consider adding a test to the callee so that changes to that unit don't break your requirements

- Unit tests should never call out from the dev box; they should be local tests only

- Unit tests should not require other things to be spun up (e.g. a local SQL server)

- Consider adding BVTs for scenarios that cannot be tested in unit tests. E.g. stored procs need to run against a real SqlDB deployed in a container during BVT, as do tests of query routing that need to run inside a web server

- All required tests should run automatically and not require humans to remember to run them

- Test in production via our INT/canary cluster

- Some things simply cannot be tested on a dev setup, as they rely on real services being up. For these, consider testing in production on our INT infra.

- All merges are automatically deployed to our INT cluster

- Add runners to INT that simulate customer workloads.

- Add real lab devices or fake devices that test as much as possible. E.g. add fake snmp trap generator to test fluentd pipeline, have real blades that can be rebooted using our APIs periodically

- Bits are then deployed to Canary clusters where there are real devices being used for internal testing, certification. Bake bits in Canary!

- All features should have measurable KPIs and metrics.

- You must add metrics against new features. Metrics should tell how well your feature is working, if your feature stops working or if any anomaly is observed

- Do not skimp on metrics, we can filter metrics on the backend rather than not having them fired

- Copious logging is required.

- Process should never fail silently

- You must add logs for both success and failure paths. Err on the side of too much logging

- Do not rely on text logs to catch production issues.

- You cannot rely on a flood of error logs from a container to catch issues. Have metrics instead (see above)

- Logs are a way to root-cause and debug and not catch issues

- Consider on-call for all development

- Ensure you have metrics and logs

- Ensure you write good documentation that anyone in the team can understand without tons of context

- Add alerts with direct link to TSGs

- Add actionable alerts where the on-call can quickly mitigate

- On-call should be able to turn off specific features in case it is causing problems in production

- All individual merges can be rolled back. Since you cannot control when the code snap for production happens, each PR should be such that it can be rolled back individually
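The retry guideline (exponential back-off, a warning per retry, an error on final failure) can be sketched with the standard library alone. `Retry` and its parameters are hypothetical; production code would typically also cap the delay and add jitter so that many clients do not retry in lockstep.

```go
package main

import (
	"errors"
	"fmt"
	"log"
	"time"
)

// errTransient stands in for a failure from a remote call (hypothetical).
var errTransient = errors.New("transient failure")

// Retry calls op up to attempts times, doubling the wait after each failure
// (exponential back-off). Each retry is logged as a warning and the final
// failure as an error.
func Retry(attempts int, initial time.Duration, op func() error) error {
	if attempts < 1 {
		attempts = 1
	}
	delay := initial
	var err error
	for i := 1; i <= attempts; i++ {
		if err = op(); err == nil {
			return nil
		}
		if i == attempts {
			break
		}
		log.Printf("WARN: attempt %d/%d failed: %v; retrying in %v", i, attempts, err, delay)
		time.Sleep(delay)
		delay *= 2
	}
	log.Printf("ERROR: giving up after %d attempts: %v", attempts, err)
	return fmt.Errorf("after %d attempts: %w", attempts, err)
}

func main() {
	calls := 0
	// Hypothetical remote call that fails twice, then succeeds.
	flaky := func() error {
		calls++
		if calls < 3 {
			return errTransient
		}
		return nil
	}
	err := Retry(5, 100*time.Millisecond, flaky)
	fmt.Println(err, calls) // <nil> 3
}
```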

Friday, February 14, 2020

The C Word - Part 2

Our confrontation with lymphoma started in 2011, when the C word entered our lives and my wife was diagnosed with Stage 4 Hodgkin's sclerosing lymphoma. We have fought through it and continue to do so. Even though she is in remission now, the shadow of cancer still hangs over us.

We had just moved across the world from India to the US in 2010 and had no family and very few friends around. We had to mostly duke it out ourselves with little support. We were able to get the best treatment available in the world through the Fred Hutch and Seattle Cancer Care Alliance. However, we do realize not everyone is fortunate to be able to do so.

Our daughter has decided to do her part now and raise funds through the Leukemia & Lymphoma Society. If you'd like to help her, please head to:

https://events.lls.org/wa/SOYSeattle20/pbasu

Sunday, January 19, 2020

Chobi - Face Detection Based Static Image Gallery Generator

The Problem

You see, thumbnail generation from images has a major problem. I needed the gallery generator to create square thumbnails for the image strip shown at the bottom of the page. However, the generated thumbnails would simply be taken either from the center or from some other arbitrary location. This meant that the thumbnails would cut off at weird places.
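The idea of anchoring the square thumbnail on a face instead of the image center can be sketched as follows. The face center is assumed to come from a detector such as pigo (detection itself is not shown), and `SquareCrop` is a hypothetical helper, not the actual facethumbnail code: it takes the largest square that fits, centers it on the face and clamps it to the image bounds.

```go
package main

import (
	"fmt"
	"image"
)

// clamp limits v to the range [lo, hi].
func clamp(v, lo, hi int) int {
	if v < lo {
		return lo
	}
	if v > hi {
		return hi
	}
	return v
}

// SquareCrop returns the largest square crop of a width x height image,
// centered as closely as possible on the face center (faceX, faceY) and
// shifted where needed so that it stays inside the image.
func SquareCrop(width, height, faceX, faceY int) image.Rectangle {
	side := width
	if height < side {
		side = height
	}
	x0 := clamp(faceX-side/2, 0, width-side)
	y0 := clamp(faceY-side/2, 0, height-side)
	return image.Rect(x0, y0, x0+side, y0+side)
}

func main() {
	// A 4000x3000 landscape photo with a detected face near the right edge.
	face := image.Rect(3300, 800, 3700, 1300)
	center := face.Min.Add(face.Max).Div(2) // (3500,1050)
	fmt.Println(SquareCrop(4000, 3000, center.X, center.Y)) // (1000,0)-(4000,3000)
	// A plain center crop would be (500,0)-(3500,3000), cutting through the face.
}
```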

The Solution

Sources

- Chobi sources are at https://github.com/abhinababasu/chobi

- It uses the face-detected thumbnail generator which I wrote at https://github.com/abhinababasu/facethumbnail.

- That in turn is based on pigo, a face detection library written fully in Go