This post is Part 8 in the series of posts on Garbage Collection (GC). Please see the index here.

One of the primary disadvantages discussed in the post on mark-sweep garbage collection is that it introduces very large system pauses when the entire heap is marked and swept. One of the primary optimizations employed to solve this issue is generational garbage collection. This optimization is based on the following observations:

- Most objects die young

- Over 90% of the garbage collected in a GC cycle was created after the previous GC cycle

- If an object survives a GC cycle, the chance of it becoming garbage in the short term is low, and hence the GC wastes time marking it again and again in each cycle

The optimization based on the above observations is to segregate objects by age into multiple generations and collect each generation at a different frequency.

This scheme has proven to work rather well and is widely used in many modern systems (including .NET).

Detailed algorithm

The objects can be segregated into age-based generations in different ways, e.g. by time of creation. However, one common way is to consider a newly created object to be in Generation 0 (Gen0); if it survives a cycle of garbage collection, it is promoted to the next higher generation, Gen1. Similarly, if an object in Gen1 survives a GC, it gets promoted to Gen2.

Lower generations are collected more often. This ensures lower system pauses. The higher generation collection is triggered fewer times.
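The promotion rule and the "lower generations are collected more often" policy can be sketched as below. This is only an illustration: `Obj`, `promote_survivors` and `generation_to_collect` are hypothetical names, and the fixed counters (`gen1_every`, `gen2_every`) are an assumption made for the example. Real collectors usually decide when to collect a generation from allocation volume rather than from a fixed cycle count.

```python
from dataclasses import dataclass

MAX_GEN = 2  # assumption: three generations, Gen0..Gen2


@dataclass
class Obj:
    name: str
    gen: int = 0  # every newly created object starts in Gen0


def promote_survivors(survivors):
    """Move each survivor of a collection up one generation, capped at MAX_GEN."""
    for obj in survivors:
        obj.gen = min(obj.gen + 1, MAX_GEN)


def generation_to_collect(cycle, gen1_every=10, gen2_every=100):
    """Pick the oldest generation to collect on this cycle (hypothetical policy).

    Cycles are counted from 1. Gen0 is collected on every cycle, Gen1 on
    every 10th cycle and Gen2 on every 100th cycle.
    """
    if cycle % gen2_every == 0:
        return 2
    if cycle % gen1_every == 0:
        return 1
    return 0


# Over 100 cycles, count how often each generation is the oldest one collected.
counts = [0, 0, 0]
for cycle in range(1, 101):
    counts[generation_to_collect(cycle)] += 1
```

With these assumed thresholds, most cycles touch only Gen0, which is what keeps the typical pause short.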

The number of generations employed varies from system to system. In .NET, 3 generations are used. Here, for simplicity, we will consider a 2-generation system, but the concepts are easily extended to more than 2.

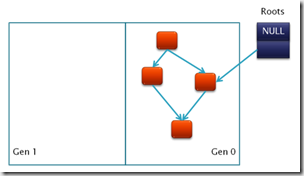

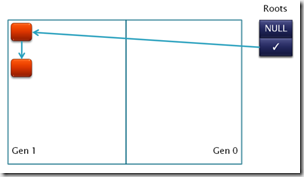

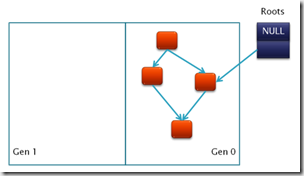

Let us consider that the memory is divided into two contiguous blocks, one for Gen1 and the other for Gen0. At the start, memory is allocated only from the Gen0 area, as follows:

So we have 4 objects in Gen0. Now one of the references is released:

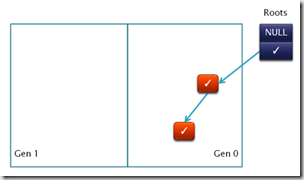

Now if a GC is fired, it will use mark and sweep on the Gen0 objects and clean up the two objects that are not reachable. So the final state after cleaning up is:

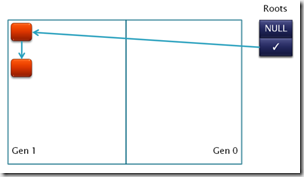

The two surviving objects are then promoted to Gen1. Promotion includes copying the two objects to the Gen1 area and then updating the references to them:

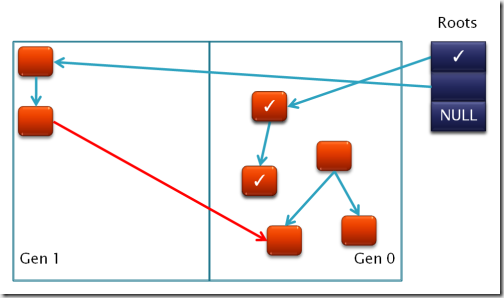
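The Gen0 collection and promotion just described can be sketched in a few lines. This is a simplified model, not how any particular runtime does it: `Obj` and `collect_gen0` are hypothetical names, generations are plain Python lists, and promotion just moves the object between lists. A real collector would copy the object into the Gen1 memory area and fix up every pointer to it.

```python
class Obj:
    def __init__(self, name):
        self.name = name
        self.gen = 0        # new objects start in Gen0
        self.refs = []      # outgoing references
        self.marked = False


def collect_gen0(roots, gen0, gen1):
    """Mark and sweep Gen0 only, then promote the survivors to Gen1."""
    # Mark: follow references only while they stay inside Gen0.
    stack = [r for r in roots if r.gen == 0]
    while stack:
        obj = stack.pop()
        if obj.marked:
            continue
        obj.marked = True
        stack.extend(child for child in obj.refs if child.gen == 0)

    # Sweep: unmarked Gen0 objects are garbage and are simply dropped.
    survivors = [o for o in gen0 if o.marked]

    # Promote: survivors move to Gen1 and Gen0 becomes empty again.
    for obj in survivors:
        obj.marked = False
        obj.gen = 1
    gen1.extend(survivors)
    gen0.clear()
    return survivors


# Four objects in Gen0: a -> b and c -> d.
a, b, c, d = (Obj(n) for n in "abcd")
a.refs.append(b)
c.refs.append(d)
gen0, gen1 = [a, b, c, d], []

roots = [a]  # the root that pointed at c has been released
collect_gen0(roots, gen0, gen1)
promoted = [o.name for o in gen1]
```

After the call, `c` and `d` are gone, `a` and `b` sit in `gen1`, and `gen0` is empty and ready for new allocations.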

Now assume a whole bunch of allocations and de-allocations have happened. Since new allocations are in Gen0, the memory layout looks like:

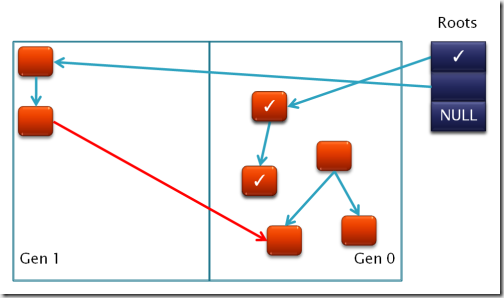

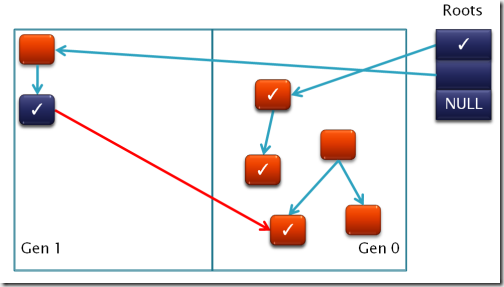

The whole purpose of segregating into generations is to reduce the number of objects to inspect for marking. So the first root is used for marking as it points to a Gen0 object. While using the second root, the moment the marker sees that the reference is into a Gen1 object, it does not follow the reference, which speeds up the marking process.

Now if we only consider the Gen0 objects for marking, then we only mark the objects indicated by ✓. The marking algorithm will fail to locate the Gen1 to Gen0 references (shown in red), so some reachable Gen0 objects will be left unmarked and swept, leading to dangling pointers.

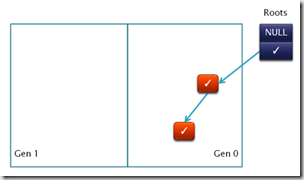

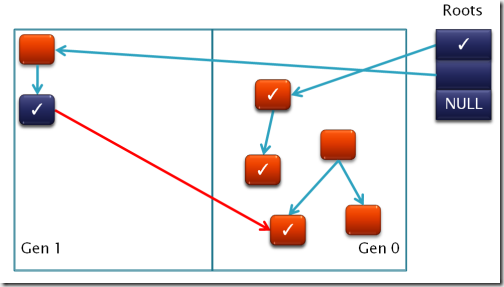

One way to handle this is to somehow record all references from Gen1 to Gen0 (how to do that is covered in the next section) and then use the recorded Gen1 objects as new roots for the marking phase. If we use this method, then we get a new set of marked objects as follows:

This now gives the full set of marked objects. After another GC and promotion of the surviving objects to the higher generation we get:

At this point the next cycle as above resumes…
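To make the problem and the fix concrete, here is a minimal sketch of Gen0 marking with and without the recorded Gen1 to Gen0 references. The names `Obj`, `mark_gen0` and `remembered` are hypothetical, and how the recorded set gets populated is deliberately left out here.

```python
class Obj:
    def __init__(self, name, gen):
        self.name = name
        self.gen = gen
        self.refs = []  # outgoing references


def mark_gen0(roots, remembered=()):
    """Return the names of Gen0 objects found live by a Gen0-only marking pass.

    `remembered` holds Gen1 objects recorded as referencing Gen0 objects;
    they are treated as extra roots.
    """
    marked = set()
    # Roots that point into Gen1 are not followed.
    stack = [r for r in roots if r.gen == 0]
    # Recorded Gen1 objects contribute their Gen0 children as starting points.
    for old in remembered:
        stack.extend(child for child in old.refs if child.gen == 0)
    while stack:
        obj = stack.pop()
        if obj in marked:
            continue
        marked.add(obj)
        stack.extend(child for child in obj.refs if child.gen == 0)
    return {o.name for o in marked}


young1 = Obj("young1", gen=0)
young2 = Obj("young2", gen=0)
old = Obj("old", gen=1)
old.refs.append(young2)      # the Gen1 -> Gen0 reference
roots = [young1, old]

without_record = mark_gen0(roots)                    # young2 is missed
with_record = mark_gen0(roots, remembered=[old])     # young2 is found
```

Without the record, `young2` is reachable through `old` but never marked, so sweeping Gen0 would free it and leave `old` with a dangling reference.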

Tracking higher to lower generation references

In general applications there are very few references of this type (some studies show < 1% of all references). However, they all need to be recorded. There are two general approaches to doing this:

Write barrier + card-table

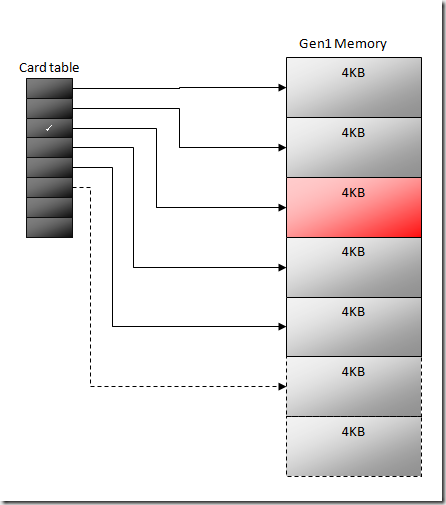

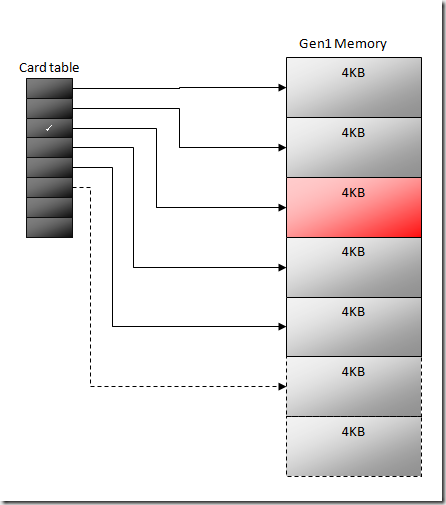

First, a table called a card table is created. This is essentially an array of bits. Each bit indicates whether a given range of memory is dirty (contains a write to a lower generation object). E.g. we can use a single bit to mark a 4KB block.

Whenever a reference assignment is made in user code, instead of doing the assignment directly, it is redirected to a small thunk (in the case of .NET, the JITter does this). The thunk compares the assignee's address to the Gen1 memory range. If the address falls within that range, then the thunk updates the corresponding bit in the card table to indicate that the range which the bit covers is now dirty (shown as red).
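A toy model of such a thunk might look like the following. Everything here is an assumption made for illustration: the Gen1 address range (`GEN1_START`, `GEN1_END`), the dictionary standing in for memory, and the use of a list of booleans instead of packed bits. It is not how the .NET write barrier is actually implemented.

```python
CARD_SIZE = 4096        # bytes covered by one card, as in the text
GEN1_START = 0x10000    # hypothetical start of the Gen1 area
GEN1_END = 0x20000      # hypothetical end of the Gen1 area (exclusive)

# One entry per 4KB block of Gen1; True means "dirty".
card_table = [False] * ((GEN1_END - GEN1_START) // CARD_SIZE)

# Simulated memory: slot address -> address of the referenced object.
memory = {}


def write_ref(slot_addr, target_addr):
    """Write barrier: perform the store, then dirty the card if the slot is in Gen1."""
    memory[slot_addr] = target_addr
    if GEN1_START <= slot_addr < GEN1_END:
        card_table[(slot_addr - GEN1_START) // CARD_SIZE] = True


# A store into a field of a Gen1 object dirties the card covering that field.
write_ref(GEN1_START + 5000, 0x30000)

# A store into a slot outside Gen1 leaves the card table untouched.
write_ref(0x30010, 0x30020)

dirty_cards = [i for i, dirty in enumerate(card_table) if dirty]
```

Note that, as in the description above, the barrier only checks where the write lands, not what is being written, so a card may be marked dirty even if the stored reference does not point into Gen0.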

First, marking uses only Gen0 objects. Once this is over, the GC inspects the card table to locate dirty blocks. It then treats every object in each dirty block as a new root and marks the objects reachable from it.
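The card-table scan can be sketched as follows. The function name `roots_from_dirty_cards`, the `(address, name)` layout of Gen1 objects, and the simplification that an object belongs to the card containing its start address are all assumptions of this example.

```python
CARD_SIZE = 4096  # bytes covered by one card


def roots_from_dirty_cards(card_table, gen1_objects, gen1_start):
    """Return the Gen1 objects that live in a dirty card.

    `gen1_objects` is a list of (address, name) pairs. Each object is
    assigned to the card that contains its start address.
    """
    extra_roots = []
    for addr, name in gen1_objects:
        card = (addr - gen1_start) // CARD_SIZE
        if card_table[card]:
            extra_roots.append(name)
    return extra_roots


gen1_start = 0x10000
gen1_objects = [
    (0x10000, "p"),  # card 0
    (0x10100, "q"),  # card 0
    (0x11000, "r"),  # card 1
    (0x11800, "t"),  # card 1
    (0x12000, "s"),  # card 2
]
card_table = [False, True, False]  # only card 1 is dirty

extra_roots = roots_from_dirty_cards(card_table, gen1_objects, gen1_start)
```

Both `r` and `t` become extra roots even if only one of them was actually written to, which is the cost of tracking writes per block rather than per object.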

As you can see, the 4KB block is just an optimization to reduce the size of the card table. If we make the granularity finer, down to one entry per object, then we can save marking time by having to consider only one object (in contrast to all objects in the 4KB range), but the card table size will also increase significantly.
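A quick calculation shows the size of this trade-off. The 1 GiB Gen1 size and the 32-byte average object size below are made-up numbers chosen only to make the arithmetic easy, and `card_table_bytes` is a hypothetical helper.

```python
def card_table_bytes(heap_bytes, card_bytes):
    """Size in bytes of a one-bit-per-card table covering `heap_bytes`."""
    cards = -(-heap_bytes // card_bytes)  # ceiling division
    return -(-cards // 8)                 # 8 card bits per byte


GEN1_BYTES = 2**30  # assumed 1 GiB Gen1 area

per_4kb_block = card_table_bytes(GEN1_BYTES, 4096)  # 32768 bytes (32 KiB)
per_object = card_table_bytes(GEN1_BYTES, 32)       # 4194304 bytes (4 MiB)
```

Under these assumptions the per-object table is 128 times larger than the 4KB-block table.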

The flip side is that the thunk makes every reference assignment slower.

HW support

Hardware support also uses a card table, but instead of using a thunk it simply uses special features exposed by the HW+OS for notification of dirty writes. E.g. it can use the Win32 API GetWriteWatch to get the list of pages where writes happened and use that information to fill in the card table entries.

However, this kind of support is not available on all platforms (or on older versions of platforms) and hence is less utilized.